If you’re looking to unlock the power of AI video generation, you’re going to love this. In today’s tutorial, I’ll walk you through everything you need to know about using Wan 2.1 by Alibaba, a powerful and completely free AI video model that competes with the top tools out there. We’ll install it locally using ComfyUI, then generate text-to-video and image-to-video results step by step.

Whether you’re an AI hobbyist or a pro video creator, Wan 2.1 might just become your new go-to. Let’s dive in!

Why Wan 2.1?

Wan 2.1 is developed by Alibaba and supports high-quality AI video generation. Released under the Apache 2.0 license, it’s free for commercial use and runs in ComfyUI, a powerful open-source, node-based interface for diffusion models.

Wan 2.1 supports:

- Text-to-video

- Image-to-video

- Up to 720p resolution (with high-end GPUs)

To use it, you’ll need:

- A GPU with at least 8GB VRAM

- ComfyUI installed (setup covered below)

Installing Wan 2.1 + ComfyUI

Step 1: Install ComfyUI (If you haven’t already)

Head to the ComfyUI GitHub repository. Download the portable 7z archive for Windows, extract it, and run run_nvidia_gpu.bat (or run_cpu.bat if you don’t have an NVIDIA GPU). Full ComfyUI installation is covered in our previous video.

Step 2: Download Required Files for Wan 2.1

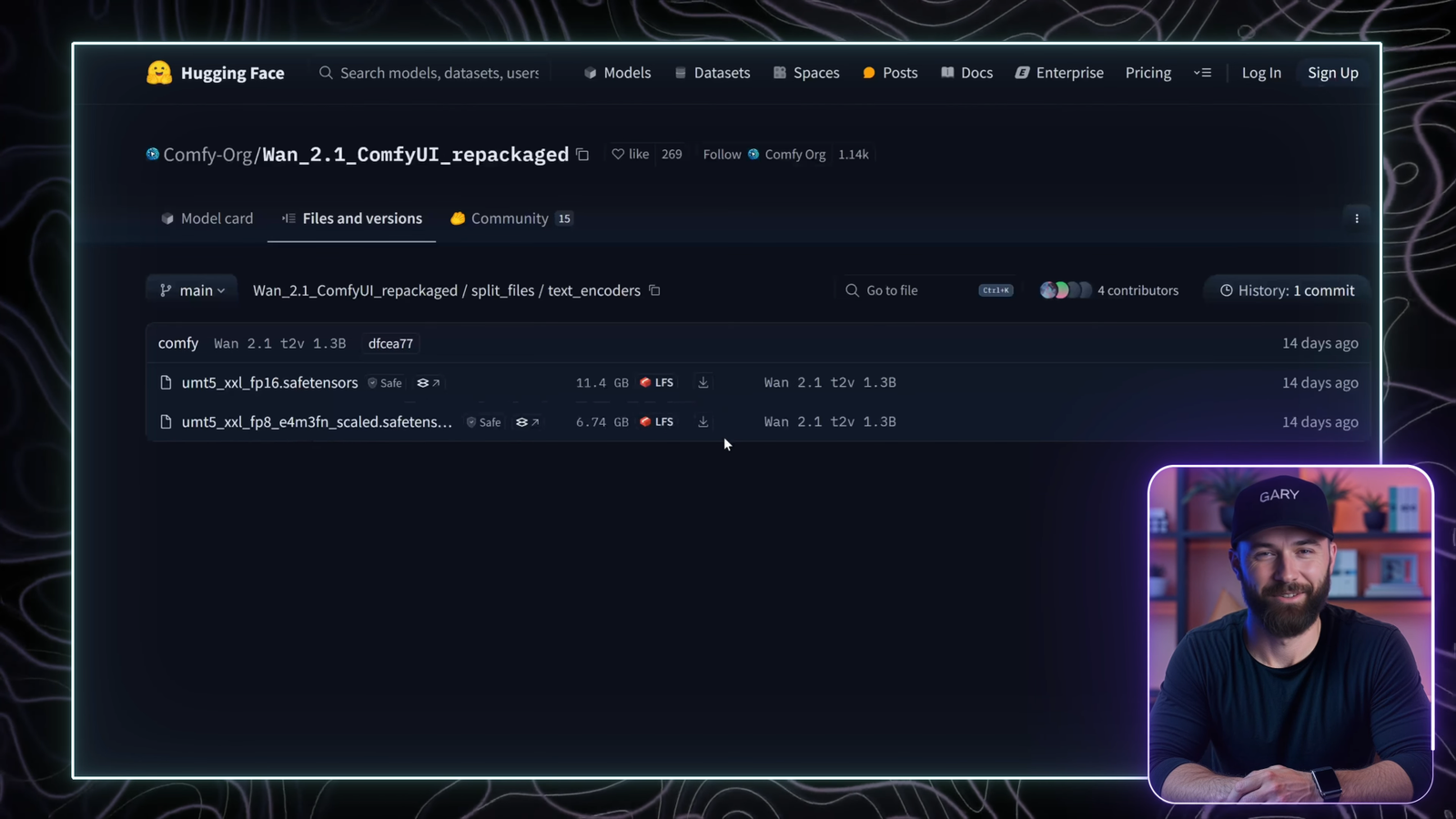

Text Encoder:

- FP16: Higher quality (larger file)

- FP8: Lighter and faster (recommended for low VRAM GPUs)

Save to: ComfyUI/models/text_encoders

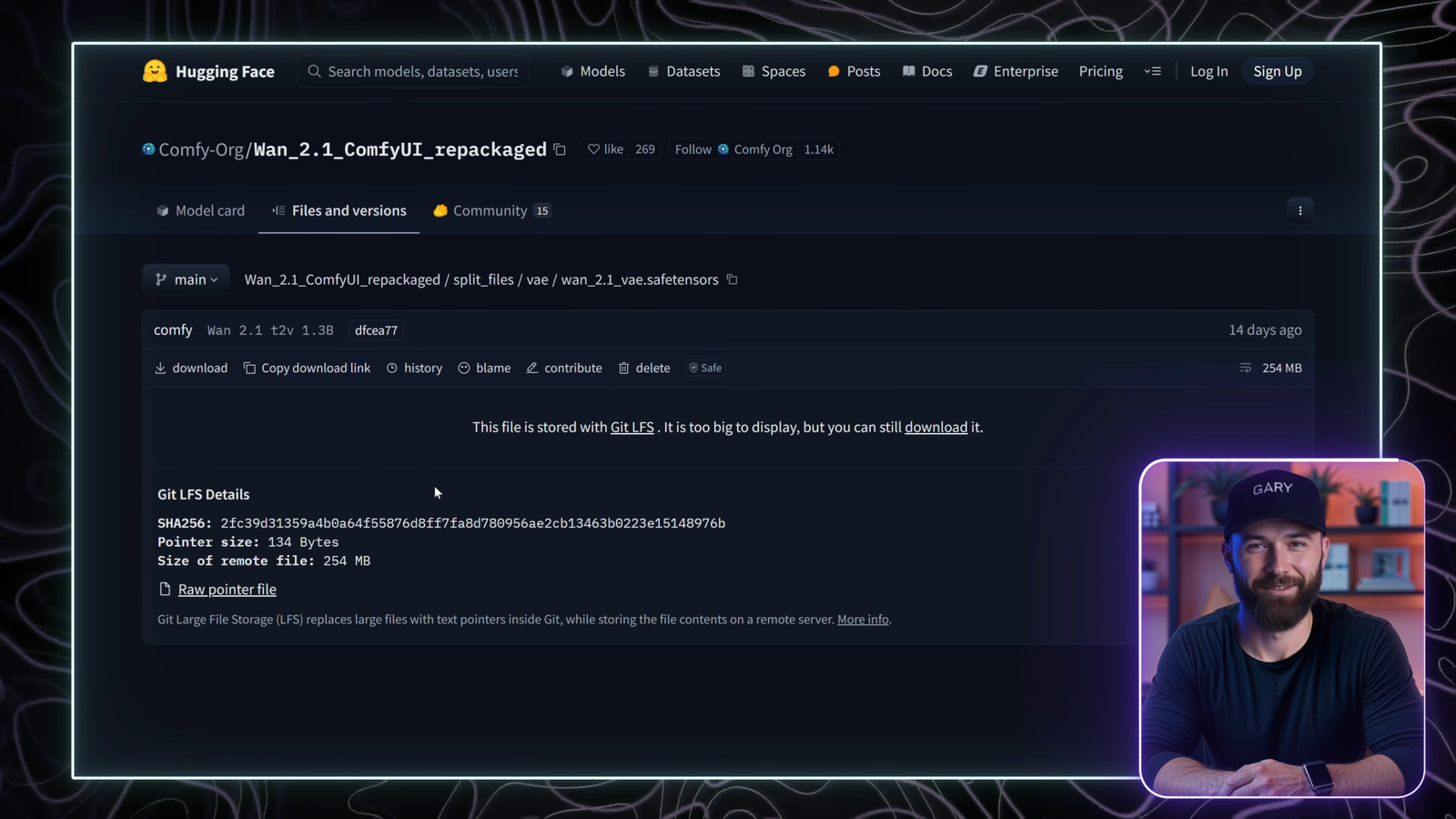

VAE File:

Download the 2.1 VAE and save to: ComfyUI/models/vae

Diffusion Model (Video Model):

Choose from:

- 14B model (720p, higher quality)

- 1.3B model (480p, lightweight)

Save to: ComfyUI/models/diffusion_models
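Before opening ComfyUI, it can help to confirm that each download landed in the right place. Here is a minimal sketch of such a check. The function name `find_missing_models` is my own, and both the folder names in `EXPECTED_FOLDERS` and the `.safetensors` extension are assumptions, so edit them to match where you actually saved your files.

```python
from pathlib import Path

# Assumed folder names; edit these to match your own ComfyUI install.
EXPECTED_FOLDERS = ("text_encoders", "vae", "diffusion_models")


def find_missing_models(comfy_root, folders=EXPECTED_FOLDERS):
    """Return the model subfolders that contain no .safetensors file."""
    models_dir = Path(comfy_root) / "models"
    missing = []
    for name in folders:
        folder = models_dir / name
        # A folder counts as populated if it holds at least one weights file.
        has_weights = folder.is_dir() and any(folder.glob("*.safetensors"))
        if not has_weights:
            missing.append(name)
    return missing
```

Calling `find_missing_models("ComfyUI")` from the folder that contains your ComfyUI install returns an empty list when every folder has at least one model file in it.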

Text-to-Video Workflow

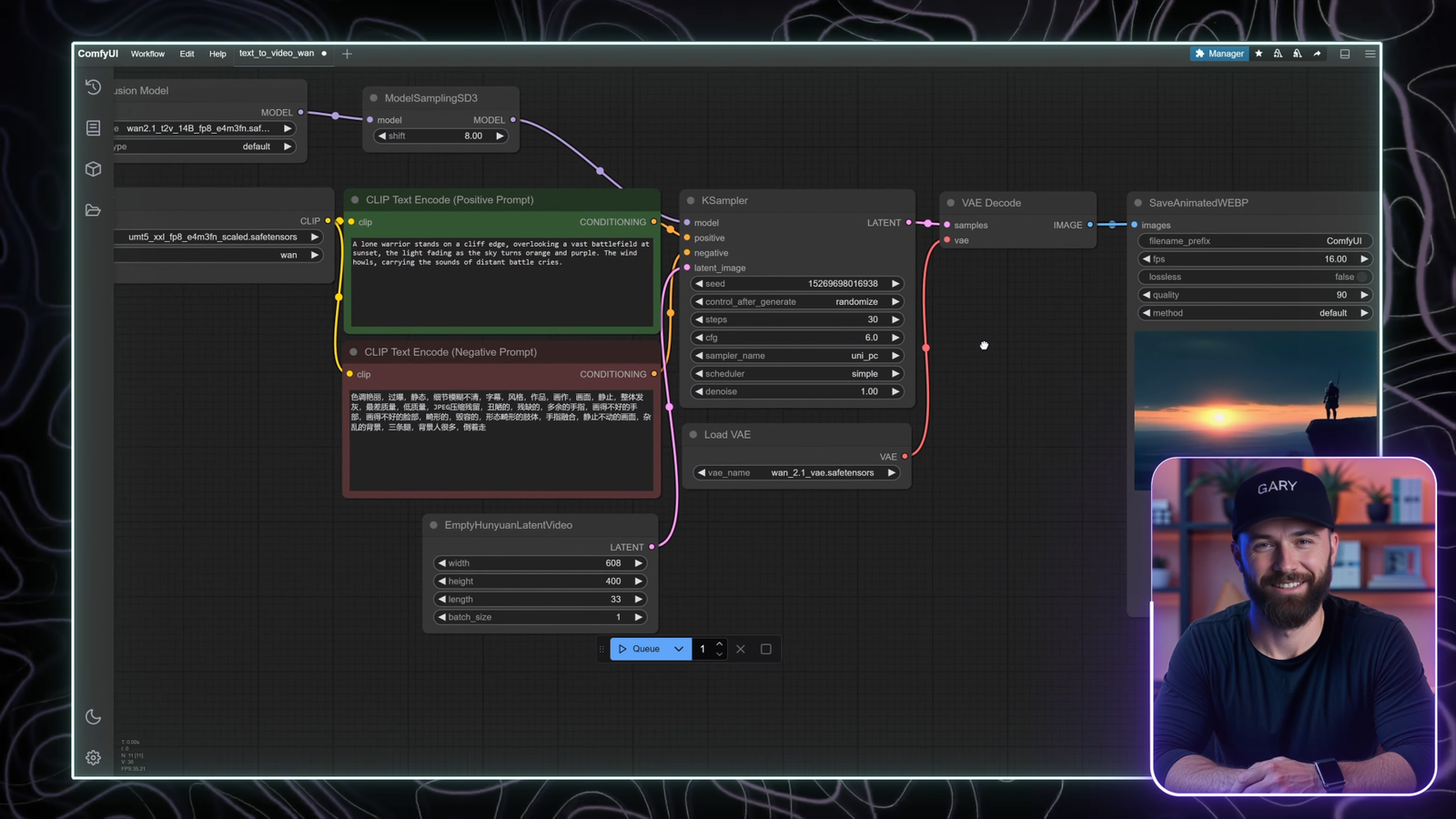

Step 1: Load Workflow

Download the pre-built JSON workflow file from the Wan 2.1 integration repo. Save it and drag it into ComfyUI.

Step 2: Configure the Workflow

- Model: Select the Wan 2.1 diffusion model (14B fp8)

- Text Encoder: UMT5-XXL (the FP16 or FP8 file you downloaded)

- VAE: 2.1 VAE

- Resolution: 1280×720 (720p) or lower

- Frames: 33 (gives ~2 seconds at 16fps)

- Steps: 30 (balance of speed + quality)

- CFG: 7-10 depending on how literal you want the AI to be
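The frame count and the frame rate together decide how long your clip runs, so here is the arithmetic as a quick sketch. The dictionary keys and the helper names `clip_seconds` and `frames_for` are purely illustrative; they are not ComfyUI settings names.

```python
# Settings from the list above; the key names are illustrative only.
settings = {"width": 1280, "height": 720, "frames": 33, "fps": 16, "steps": 30, "cfg": 7}


def clip_seconds(frames, fps=16):
    """Length of the clip in seconds for a given frame count."""
    return frames / fps


def frames_for(seconds, fps=16):
    """Frame count needed to cover roughly `seconds` of video."""
    return round(seconds * fps)


print(clip_seconds(settings["frames"], settings["fps"]))  # 2.0625
```

So 33 frames at 16fps is just over 2 seconds, and a 5-second clip would need about 80 frames, which also means a proportionally longer render.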

Prompt Example:

Positive: A lone warrior stands on a cliff edge overlooking a vast battlefield at sunset

Negative: Blurry, distorted, artifacts, low quality

Generate:

Click “Queue Prompt”. Generation is slow: in my testing, 720p with 30 steps took about 5 hours, while lower resolutions (e.g. 600×400) finished in 20-30 minutes.

Once complete, check the ComfyUI/output folder.
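If you’d rather queue long renders from a script than click the button, ComfyUI also runs a local HTTP server. The sketch below assumes the default address `http://127.0.0.1:8188` and a workflow exported in API format (not the regular workflow JSON you drag into the UI). The helper names `build_queue_request` and `queue_workflow` are my own, so treat this as a starting point rather than an official client.

```python
import json
import urllib.request


def build_queue_request(workflow, server="http://127.0.0.1:8188"):
    """Build a POST request that submits an API-format workflow to /prompt."""
    payload = json.dumps({"prompt": workflow}).encode("utf-8")
    return urllib.request.Request(
        f"{server}/prompt",
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def queue_workflow(path, server="http://127.0.0.1:8188"):
    """Load a workflow file and queue it; requires a running ComfyUI server."""
    with open(path, encoding="utf-8") as f:
        workflow = json.load(f)
    with urllib.request.urlopen(build_queue_request(workflow, server)) as resp:
        return json.loads(resp.read())
```

With ComfyUI running, `queue_workflow("my_workflow_api.json")` (a hypothetical file name) submits the job, and the finished video still lands in the output folder.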

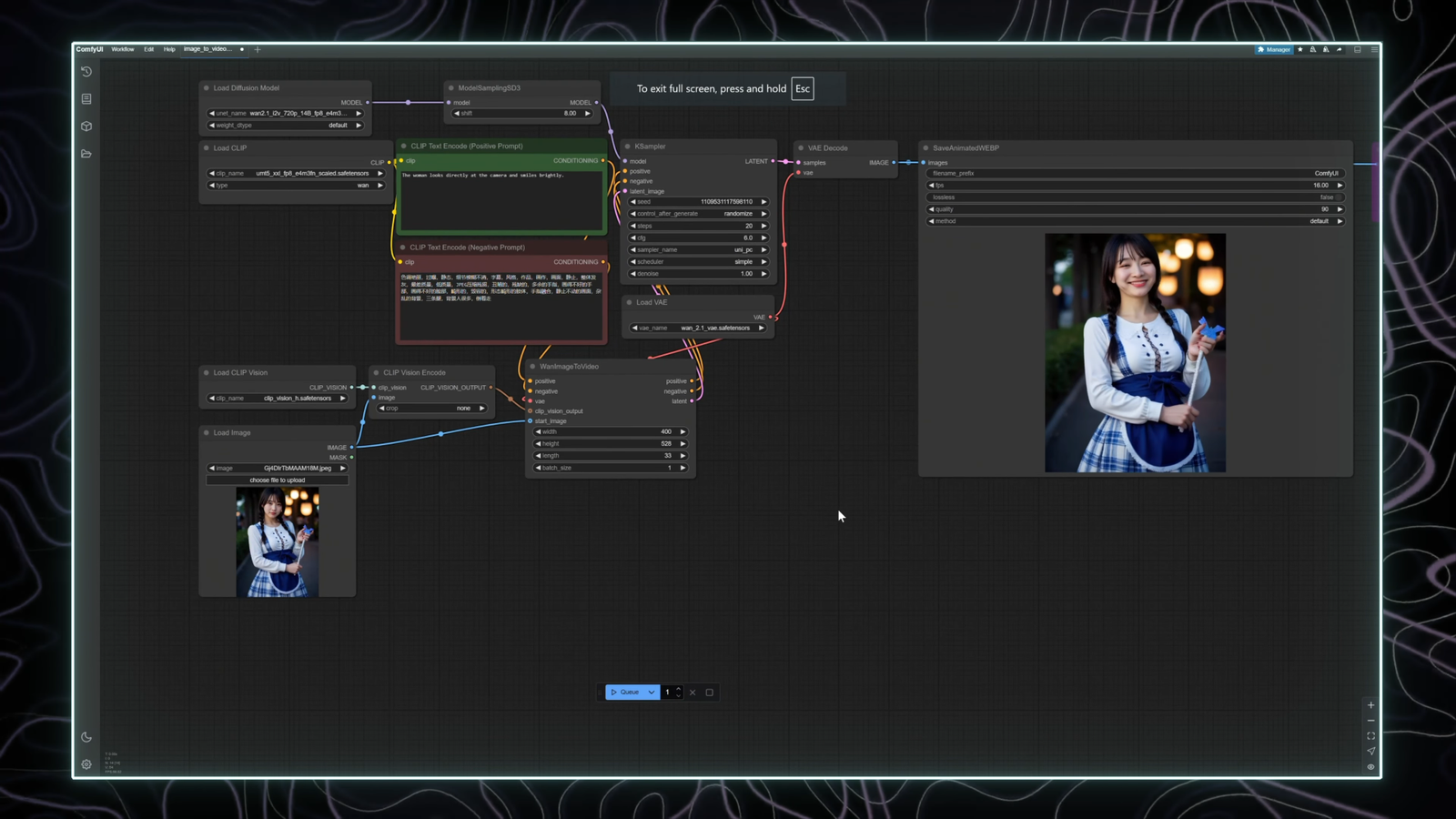

Image-to-Video Workflow

Step 1: Download Required Files

- Image-to-Video model (choose 720p or 480p, FP8 or BF16)

- CLIP Vision model (for reference image)

Save to:

- Diffusion model: ComfyUI/models/diffusion_models

- CLIP Vision: ComfyUI/models/clip_vision

Step 2: Load the Image-to-Video Workflow

Download and import the JSON workflow file into ComfyUI.

Step 3: Set Up the Workflow

- Image Input: Upload your starting image

- Text Encoder: UMT5-XXL

- VAE: 2.1

- CLIP Vision: Load the CLIP Vision model

- Resolution: Match to input image (e.g. 512×512)

- Frames: 33 (~2 seconds at 16fps)

Prompt Example:

Positive: The woman looks directly at the camera and smiles brightly

Negative: Leave default

Generate:

Click “Queue Prompt” and wait for processing. Make sure the width and height in the workflow match your input image’s aspect ratio; otherwise the image may be cropped or distorted.
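To pick a width and height that fit your input image, you can scale it down and keep the aspect ratio. Here is a small sketch of that calculation. The helper name `fit_resolution` is my own, and rounding down to a multiple of 16 is an assumption on my part (a common convention for diffusion models), not a documented Wan 2.1 requirement.

```python
def fit_resolution(width, height, max_side=512, multiple=16):
    """Scale (width, height) so the longer side fits in max_side, keeping the ratio."""
    longest = max(width, height)
    if longest > max_side:
        # Integer math avoids floating-point rounding surprises.
        width = width * max_side // longest
        height = height * max_side // longest

    def snap(value):
        # Round down to the nearest multiple (assumed convention).
        return max(multiple, value // multiple * multiple)

    return snap(width), snap(height)


print(fit_resolution(1920, 1080, max_side=1280))  # (1280, 720)
```

Note that snapping to a multiple can nudge the aspect ratio slightly for odd-sized images, so crop the source image first if you need an exact match.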

Workflow Tips

- Reusing Prompts: Save workflows for future use

- Seed Control: Set a fixed seed for consistent results

- Optimization: Reduce resolution or frame count for faster testing
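If you save your workflow in API format, you can also pin the seed from a script before re-running it. This is a hedged sketch: it assumes the exported JSON is a dictionary of nodes, each with an `inputs` dictionary, and that sampler nodes store their seed under `seed` or `noise_seed`. The helper name `with_fixed_seed` is hypothetical.

```python
import copy


def with_fixed_seed(workflow, seed):
    """Return a copy of an API-format workflow with every seed input pinned."""
    updated = copy.deepcopy(workflow)  # leave the caller's workflow untouched
    for node in updated.values():
        inputs = node.get("inputs", {})
        for key in ("seed", "noise_seed"):  # assumed seed field names
            if key in inputs:
                inputs[key] = seed
    return updated
```

Re-running the pinned workflow with the same prompt and settings should then give you a repeatable result, which makes it much easier to compare one change at a time.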

📊 Comparison of Quality & Performance

| Tool | Quality | Speed | Price |
|---|---|---|---|
| Wan 2.1 | ⭐⭐⭐⭐☆ | Slower | Free ✅ |
| Sora | ⭐⭐⭐⭐⭐ | Fast | Paid 💰 |
| Kling AI 1.6 | ⭐⭐⭐⭐☆ | Fast | Pro version available |
| Hailuo AI | ⭐⭐⭐☆☆ | Average | Free ✅ |

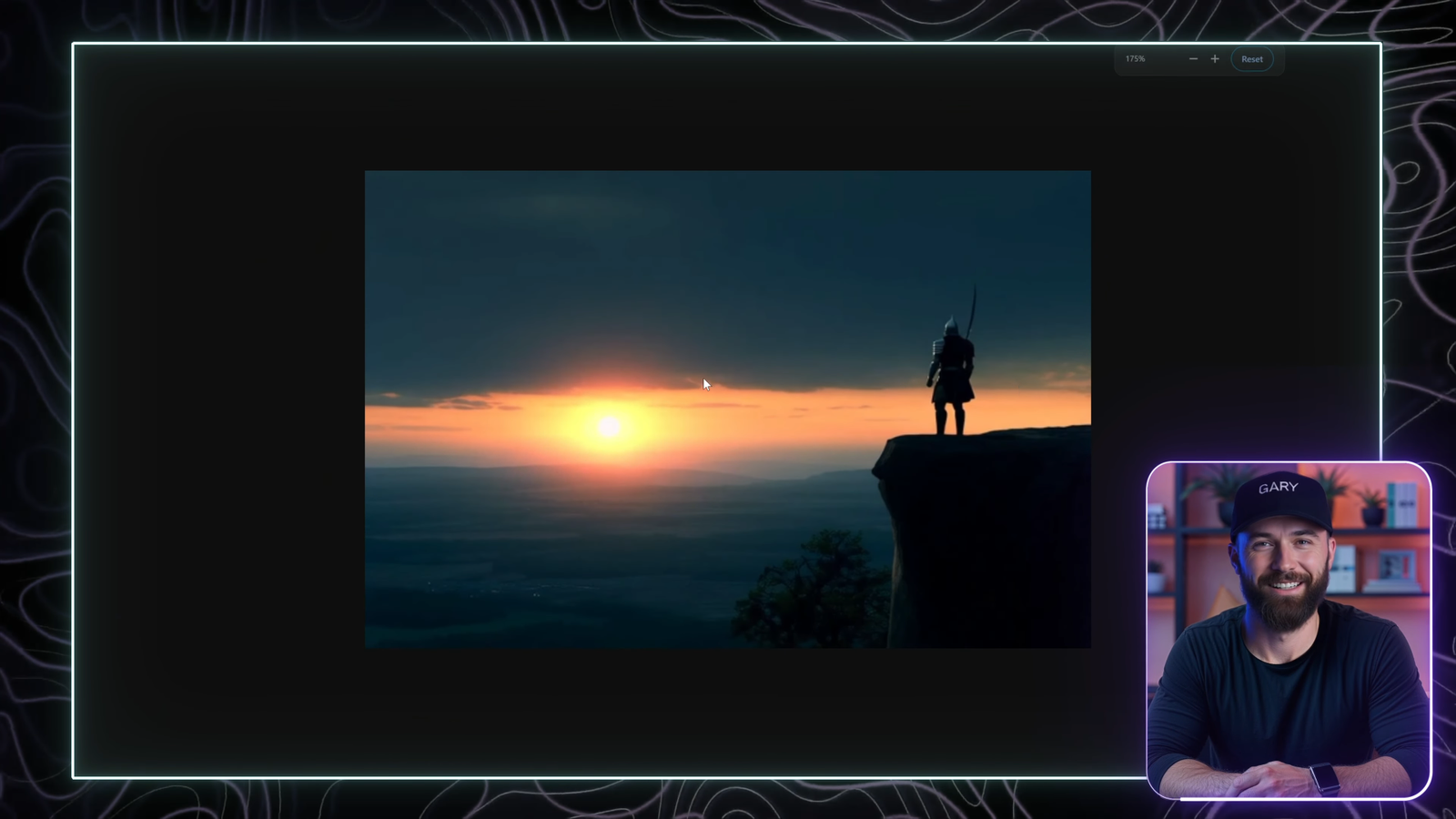

🎥 Wan 2.1 delivers beautiful and smooth results, especially for landscape scenes and static characters.

In testing, Wan 2.1 generated highly detailed video outputs. Compared to Sora, Kling AI 1.6 Pro, and Hailuo AI, it held its ground remarkably well, especially considering it’s free and open source.

720p generations took longer (up to 5 hours), but results were cinematic and stable. The 480p models provide a faster, more accessible alternative.

For example, the same scene generated in Sora and Kling AI rendered faster, but Wan 2.1’s output was stylistically comparable.

🤖 Summary: Why Use Wan 2.1 with ComfyUI?

- ✅ 100% Free (Apache 2.0 License)

- ✅ Commercial-use ready

- ✅ Local generation = full privacy

- ✅ ComfyUI offers modular control

- ✅ Competes with top-tier paid tools

If you’re an AI creator or video artist, Wan 2.1 is a no-brainer. With just a few hours of setup and experimentation, you’ll be generating custom cinematic videos on your own machine.

Stay tuned for more in-depth tutorials where I compare Wan 2.1 head-to-head with other tools.

If this guide helped you, don’t forget to like, subscribe, and leave a comment!

#AIwithGary #Wan2.1 #ComfyUI #AIvideogeneration #TextToVideo #ImagetoVideo #AItools #OpenSourceAI #AItutorial #StableDiffusion
